Source: Wikipedia

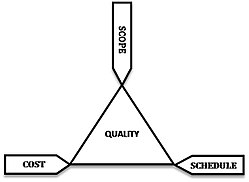

The definition of Quality, however, is often complicated, especially when there is no physical product or clear monetary benefit.

For many change / transformation teams, the metrics for a Programme are about delivery - how much the team can deliver in the next n years, despite not knowing where they are today. In this case, Q becomes Quantity.

The drive to meet a Quantity-led KPI often forces delivery teams into shoehorning in a solution by the stated deadline, whether or not the solution is scalable enough to meet the enterprise's needs.

Over time, the story is the same:

- functionality and usability suffer

- manual workarounds start to appear to plug the gaps

- the implemented work cannot be reused for other areas

- data is a mess

- the original team leaves

- the organisation pulls the plug

- another project is commissioned to replace it (go back to the paragraph above to rinse and repeat)

- the manual processes are turned into work packages and shipped off to an outsourcing outfit, the corporate world's version of sweeping dirt under the carpet

Transitioning

So to the main point - how can an organisation transition from a Quantity-driven mission to one that focuses on Quality?

Start by acknowledging that a Quantity-driven metric, while easy to measure, may not be the best place to start. Look at past deliveries and draw out the lessons learnt from them. Use these findings to define what Quality looks like to the organisation.

Ideas about Quality

Some ideas around a Quality deliverable can include:

- Is the Product a working product? Did it deliver to scope?

- Can the Product and/or its corresponding processes and outputs be reused?

- Is the carry-over defects list something that can be resolved within the next 6-12 months?

- How good is it, if people have to handle it manually?

- See also this article on how to identify bad data

And a bonus philosophical question: If the product does what it says on the box, but does not work due to bad data or other bad processes, did the project team do a good job?

How to get good Quality

- Change the metrics. Stop making it about how many - make it about how well solutions align to the enterprise architecture

- No Enterprise Architecture? Perhaps that's where the investment should go first. Not enough budget? Put a business-aligned data architecture into play (by the way - if there is a strong Enterprise Architecture setup, many projects can be run using Agile methodologies).

- Spend more time understanding: the current status; other in-flight projects and their expected outcomes.

- If time is an absolute constraint, ensure that an enterprise solution is planned to replace any workarounds, and invest in that solution post go-live. Senior management's reduced appetite to fix the problem after implementation is understandable but, like wet rot in a log cabin, it is not good for the organisation in the long term

- Open communications with the senior owner and the project team so they can make the necessary calls to change or hold or stop projects

- Create a culture where people are rewarded for calling out issues. Additional time or costs in the short term may mean tens or even hundreds of times more in savings in the future

- Get people to cross-pollinate ideas. Get rid of silos, especially the Us vs Them mindset of IT vs Business (Users). This is best done when there are crossovers from Business into IT. And yes, there will be an investment cost in people but it's well worth it.

Remember: the right KPIs drive the right behaviours, which will, in the long run, build a stronger foundation for the organisation.